The Risks of AI in Federal Workforces: Navigating a Potential Dystopia

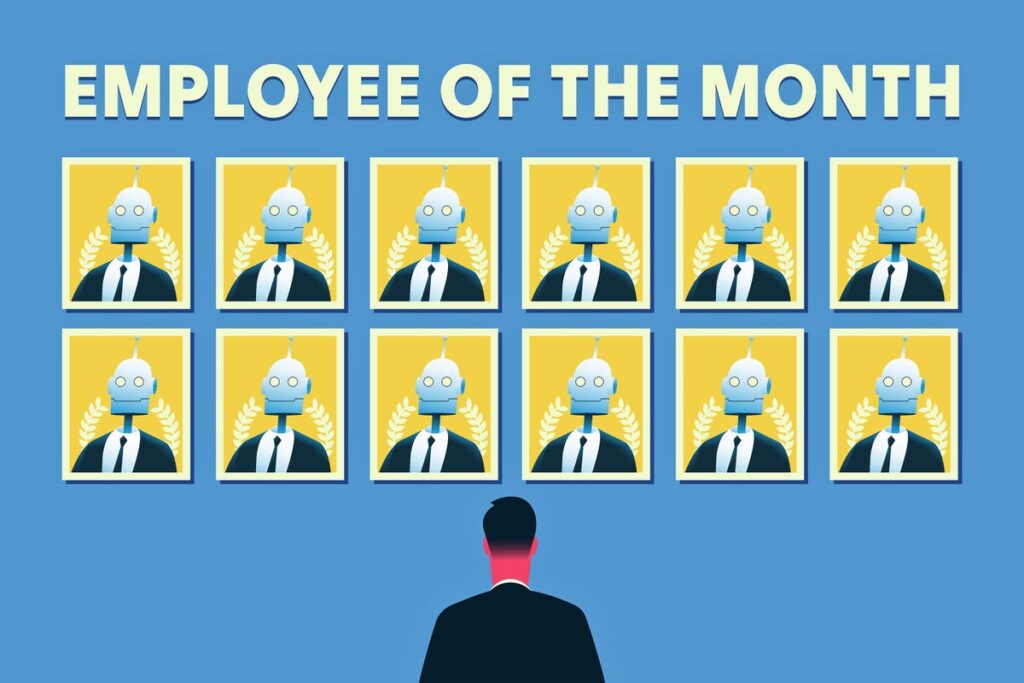

Amid a surge of enthusiasm for artificial intelligence, proposals to replace human workers in the U.S. federal workforce with automated systems raise significant concerns. The current administration’s push for an AI-driven model could lead to a range of unforeseen complications, particularly because generative AI tools are not consistently accurate or reliable.

AI and the Federal Workforce: A Troubling Proposition

Imagine a person calling the Social Security Administration to ask about their payment status, only to be told abruptly that all future payments have been canceled. Such an error would be an example of “hallucination,” in which an AI system fabricates a response that has no basis in the user’s input or in the underlying facts.

Hallucinations are not merely hypothetical. They are a real, documented problem with generative AI technologies, including those developed by prominent companies such as OpenAI and xAI. Although these systems are promoted for their potential efficiency, they have fundamental flaws that could limit their usefulness in high-stakes government operations.

The Issue of “Hallucinations”

Historically, speech recognition systems rarely generated nonsensical output. Earlier systems made minor transcription errors, such as misheard words, but they did not typically invent whole passages. Newer generative systems such as OpenAI’s Whisper, however, have shown a troubling pattern: some of their erroneous transcriptions contain entirely invented content.

Recent studies have shown that Whisper can produce sentences with no basis in the audio provided. For example, transcripts that should have reproduced what speakers actually said were found to include fabricated details about individuals. This raises ethical and practical concerns about deploying such systems in sensitive contexts.

Problems with Current AI Models

Generative models tend to invent content despite efforts to measure the problem and despite being trained on expansive datasets. This tendency has serious implications for tasks traditionally handled by federal employees. Current generative AI systems aim for broad applicability, but this “one model for everything” approach may dilute their effectiveness at any single task.

- General-purpose models like ChatGPT and Grok are engineered to perform a wide variety of functions, often at the expense of precision.

- AI systems like Whisper produce their output by predicting the next token of text rather than by transcribing speech verbatim. This raises the risk that they generate plausible-sounding text the speaker never said.

The Consequences of AI Missteps

As excitement about AI capabilities grows, the actual performance of these systems raises questions about their use in critical federal services. Automated tools that misinterpret or misrepresent information could lead to serious mismanagement in areas such as healthcare, immigration, and public safety.

Replacing skilled federal workers with unreliable generative AI could not only hinder operational efficiency but also erode public trust. Experienced staff bring expertise and context to their work, allowing nuanced decisions that automated systems cannot replicate.

Moving Forward: Rethinking Automation

In light of these challenges, any move to replace human workers with machines in federal roles deserves close scrutiny. Relying on flawed AI systems risks severe repercussions for the American public, potentially affecting millions of people.

In conclusion, while AI holds immense potential for improving many sectors, the stakes become perilously high in government contexts. Stakeholders must work together to address these challenges, advocating a cautious approach to AI implementation that prioritizes accuracy, reliability, and human oversight.